From lab to market, understand why Google, IBM, Microsoft, and NVIDIA are accelerating a revolution that will impact your business

In 1981, Richard Feynman asked why researchers were trying to simulate Quantum Physics on classical computers when nature itself is quantum. From this insight came the idea of building computers that use the rules of Quantum Mechanics directly to solve problems that, for traditional machines, would be impossible or economically unfeasible.

For decades, Quantum Computing was a topic for scientific conferences, not board meetings. It remained restricted to papers, laboratories, and a small group of specialists scattered around the world. It was seen as something “for many decades from now.”

Fast-forward to today. In one of his keynotes, Jensen Huang, NVIDIA’s CEO, gives a straightforward summary: until recently, hardly anyone talked about Quantum Computing outside Academia, and results were essentially experimental. Now the vocabulary has changed. Words like product, platform, partnership, and investment have entered the scene. Major players (Google, IBM, Microsoft, and NVIDIA itself) have turned a research field into a commercial race. When companies of this size align brand, budget, and roadmap around the same technology, the message to the market is clear: it is no longer science fiction; it is a transition already underway.

From theory to practice: the evolution of Quantum Computing

This history can be summarized in a few milestones.

In the 1980s, Feynman planted the seed by proposing that quantum systems be used to simulate quantum phenomena. In the following decades, the 1990s and 2000s, algorithms emerged that showcased the potential of this model, such as Shor’s algorithm, capable of factoring large numbers far more efficiently than any known classical method, and Grover’s algorithm, which offers a quadratic speedup for searching unstructured data (on the order of √N steps instead of N). It was all still theoretical, but it already carried enormous promise.
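To make Grover’s idea more concrete, here is a minimal sketch that simulates the algorithm on an ordinary computer by tracking the amplitudes of a small quantum register. It assumes 3 qubits (8 possible items) and an arbitrarily chosen marked item, index 5; the function name is hypothetical. A classical simulation like this only illustrates the mechanics (the oracle flips the sign of the target, the diffusion step amplifies it) and, of course, provides no speedup.

```python
import math

def grover_search(n_qubits: int, marked: int) -> list[float]:
    """Simulate Grover's algorithm on a classical state vector and return
    the measurement probability of every basis state."""
    size = 2 ** n_qubits
    # Start in the uniform superposition produced by Hadamards on every qubit.
    amps = [1 / math.sqrt(size)] * size
    # Near-optimal number of iterations: about (pi / 4) * sqrt(N).
    iterations = math.floor(math.pi / 4 * math.sqrt(size))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / size
        amps = [2 * mean - a for a in amps]
    # Measurement probabilities are the squared (real) amplitudes.
    return [a * a for a in amps]

probs = grover_search(n_qubits=3, marked=5)
print(f"P(marked) = {probs[5]:.3f}")  # prints "P(marked) = 0.945" after 2 iterations
```

After only two iterations, the marked item is measured with roughly 94.5% probability, versus 1/8 for a random guess; a classical search over unstructured data would need to inspect items one by one.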

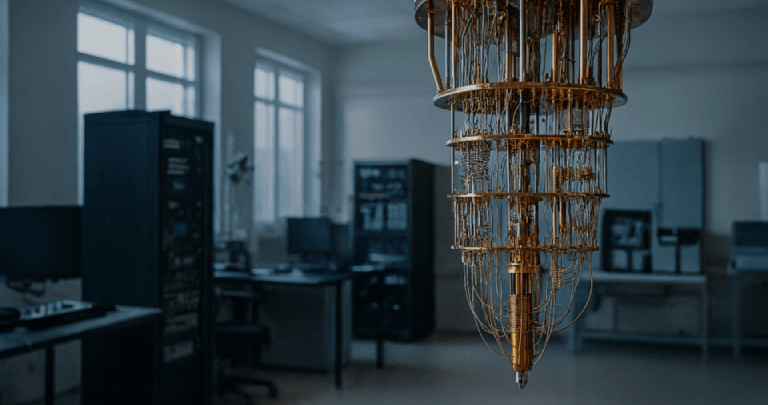

From 2010 onwards, we began to see the first accessible devices. Cloud platforms allowed researchers and companies to experiment on real quantum hardware. IBM, for instance, made remote access to its first quantum computers available, opening up the possibility of testing algorithms and applications without having to set up an entire lab.

Between 2020 and 2025, the curve steepened. We entered the phase in which Quantum Computing stopped being just a “paper topic” and began appearing in product presentations, investor pitches, and the long-term strategies of Big Tech companies. This is the moment when qubits (quantum bits, the basic unit of information in quantum computing) and quantum circuits start sharing space with KPIs, ROI, and technology roadmaps.

The role of the giants: Google, IBM, Microsoft, and NVIDIA

Google grabbed the spotlight in 2019 when it announced that its Sycamore quantum processor, with 53 qubits, had performed a specific task in about 200 seconds, something that, according to the company itself, would take thousands of years on a classical supercomputer. The term “quantum supremacy” generated debate, but one conclusion became clear: there are already very specific, carefully chosen problems in which a quantum computer can outperform the best known classical approaches. For the corporate world, this lights up two signals at once: opportunity (new ways to simulate, optimize, and analyze data) and risk (falling behind in modeling and innovation capabilities).

IBM took a complementary path, treating Quantum Computing as a product from early on. It has announced processors with more than 1,000 qubits, such as Condor, but its message goes beyond raw qubit counts: the company presents a public roadmap and speaks openly about quantum-centric supercomputers that combine quantum hardware, software, cloud services, and well-defined use cases. Finance, chemistry, logistics, and energy are some of the sectors already mapped in its materials and demos. The message is: there is a plan, not just a lab experiment.

Microsoft, in turn, is betting on topological qubits, a type of qubit designed to be more resistant to noise and therefore better suited to scaling. The announcement of chips with “topological cores” and the vision of reaching a million qubits on a single chip reveal a long-term ambition. There is skepticism in the scientific community, and plenty of discussion about how far marketing is getting ahead of technical reality. Even so, the investment is concrete: expansion of labs, partnerships with research centers, and clear integration with the Azure ecosystem, preparing the environment for quantum workloads as soon as the technology is ready for production.

NVIDIA has chosen a different strategic position. Instead of competing directly to build the “largest quantum computer,” the company wants to be the glue between Quantum Computing, AI, and High-Performance Computing. With the CUDA-Q platform, it offers an environment where developers can orchestrate CPUs, GPUs, and QPUs (quantum processing units) in the same workflow. In practice, this makes it possible to write applications in which part of the workload runs on GPUs (simulation, AI, preprocessing) and another, specific part is sent to a quantum processor. With specialized interconnects and partnerships with quantum companies added to the mix, NVIDIA aims to position itself as the standard infrastructure for a hybrid future in which different types of processors work together.
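The hybrid pattern described above can be illustrated without any vendor SDK. In the minimal sketch below, a classical optimization loop (the CPU/GPU side) repeatedly calls a “quantum” function and adjusts a circuit parameter based on the result, the basic structure of variational algorithms. Here the quantum step is simulated in plain Python on one qubit; in a real deployment it would be a job dispatched to a QPU or a GPU simulator through a platform such as CUDA-Q, whose actual API is not shown. The function names, starting angle, and learning rate are illustrative assumptions. The gradient uses the parameter-shift rule, a standard technique for estimating gradients from two extra circuit runs.

```python
import math

def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum step: prepare RY(theta)|0> on one simulated
    qubit and return the expectation value of the Pauli-Z observable."""
    # RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    # <Z> = P(0) - P(1), which equals cos(theta) for this state.
    return amp0 ** 2 - amp1 ** 2

def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two extra circuit evaluations (parameter-shift rule)."""
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2

def hybrid_minimize(theta: float = 0.1, learning_rate: float = 0.4, steps: int = 100) -> float:
    """Classical loop that tunes the circuit parameter by gradient descent."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta

best_theta = hybrid_minimize()
print(f"theta = {best_theta:.4f}, <Z> = {quantum_expectation(best_theta):.4f}")
```

The loop drives theta toward π, where the measured energy <Z> reaches its minimum of -1. The division of labor is the point: the quantum device only evaluates circuits, while the classical hardware decides what to run next.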

What is already possible with Quantum Computing today

If you are a technology or business decision-maker, it is natural to ask what can be done in concrete terms right now. It is important to be honest: most of today’s equipment is in the NISQ (Noisy Intermediate-Scale Quantum) phase, which means machines with tens or hundreds of qubits that are still quite susceptible to noise. Even so, several application fronts are already being tested.

In the field of optimization, problems such as fleet routing, production planning, and resource allocation are being studied with quantum or quantum-inspired algorithms, often combined with Artificial Intelligence techniques. In chemistry and materials science, simulations of complex molecules and advanced materials are natural candidates for quantum advantage, with impact on pharmaceuticals, energy, fertilizers, batteries, and more. In the financial sector, there is research on risk modeling, derivatives pricing, and portfolio optimization using hybrid approaches. More recently, experiments have begun to appear in Quantum Machine Learning (QML), which uses quantum circuits as building blocks in AI models.
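Optimization problems are usually handed to quantum optimizers (for example, QAOA circuits or quantum annealers) as a quadratic function of binary variables, often called a QUBO. The sketch below uses Max-Cut, a standard benchmark chosen here as a stand-in rather than one of the business problems listed above: split the nodes of a graph into two groups so that as many edges as possible cross between them. The 4-node ring and the function names are hypothetical. The brute-force search checks all 2^n assignments, which is exactly the exponential growth that motivates research into quantum approaches for larger instances.

```python
from itertools import product

def cut_size(assignment: tuple[int, ...], edges: list[tuple[int, int]]) -> int:
    """Number of edges whose endpoints land in different groups.

    For binary variables x_i, x_j the term x_i + x_j - 2*x_i*x_j equals 1
    exactly when the edge is cut, so the total is a quadratic (QUBO-style)
    objective in the binary variables."""
    return sum(assignment[i] + assignment[j] - 2 * assignment[i] * assignment[j]
               for i, j in edges)

def brute_force_max_cut(n_nodes: int, edges: list[tuple[int, int]]) -> tuple[tuple[int, ...], int]:
    """Check all 2**n assignments; feasible only for tiny graphs."""
    best = max(product((0, 1), repeat=n_nodes), key=lambda x: cut_size(x, edges))
    return best, cut_size(best, edges)

# Hypothetical example: 4 sites connected in a ring.
ring = [(0, 1), (1, 2), (2, 3), (3, 0)]
assignment, value = brute_force_max_cut(4, ring)
print(assignment, value)  # (0, 1, 0, 1) 4
```

With 4 nodes there are only 16 candidates; with 60 nodes there would be more than 10^18, which is why real instances rely on heuristics, classical solvers, or, experimentally, quantum hardware.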

In practice, most of this is still at the R&D stage: pilot projects, proofs of concept, and collaborations between technology companies, universities, and players in strategic sectors. The crucial point is that organizations that start experimenting now build up knowledge that is hard to copy later.

Security and cryptography in the post-quantum world

Another point that concerns executives and technology teams is security. Quantum algorithms such as Shor’s could break cryptographic schemes widely used today, such as RSA and ECC (elliptic-curve cryptography), once large, reliable quantum computers exist. This is why the discussion on post-quantum security is no longer theoretical; it has become strategic.
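Shor’s threat to RSA rests on a classical reduction: factoring a number N reduces to finding the order r of some a modulo N, that is, the smallest r with a^r ≡ 1 (mod N). The sketch below carries out that reduction on the toy modulus 15, with a brute-force loop standing in for the quantum period-finding step that gives Shor’s algorithm its speedup. The function names are hypothetical, and the brute-force loop is exponential in the size of N, so this illustrates the structure of the attack, not a practical one.

```python
from math import gcd
from typing import Optional

def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This brute-force loop is the step Shor's algorithm replaces with
    quantum period finding."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(a: int, n: int) -> Optional[tuple[int, int]]:
    """Classical post-processing used in Shor's algorithm."""
    d = gcd(a, n)
    if d != 1:
        # Lucky guess: a already shares a factor with n.
        return d, n // d
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # trivial square root of 1: retry with a different a
    # half**2 = 1 (mod n) with half != +-1, so gcd(half - 1, n) is a proper factor.
    p = gcd(half - 1, n)
    return p, n // p

print(factor_from_order(7, 15))  # (3, 5)
```

For RSA moduli with thousands of bits, the order-finding loop is hopeless on classical hardware; a large, fault-tolerant quantum computer running period finding is what would change that.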

Standardization bodies such as NIST (National Institute of Standards and Technology) have already selected post-quantum cryptography algorithms: CRYSTALS-Kyber for key establishment and CRYSTALS-Dilithium, FALCON, and SPHINCS+ for digital signatures. In August 2024, NIST published the first finalized standards derived from them: FIPS 203 (ML-KEM, based on Kyber), FIPS 204 (ML-DSA, based on Dilithium), and FIPS 205 (SLH-DSA, based on SPHINCS+). At the same time, concern is growing over the “harvest now, decrypt later” scenario, in which encrypted data is captured and stored today to be decrypted in the future, once Quantum Computing reaches maturity.

If your organization handles sensitive information with a long lifespan (health data, government records, intellectual property, long-term contracts, trade secrets), migrating to post-quantum algorithms is not a topic to leave for “later.” It needs to enter the security roadmap now, alongside other digital transformation initiatives.
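A common way to reason about “not later” is Mosca’s inequality, a rule of thumb proposed by researcher Michele Mosca: if x (how long data must stay confidential) plus y (how long the migration to post-quantum cryptography will take) exceeds z (the estimated time until a cryptographically relevant quantum computer exists), data encrypted today is already exposed to “harvest now, decrypt later.” The sketch below turns that into a tiny calculation; the function name and all input numbers are hypothetical planning figures, not forecasts.

```python
def quantum_exposure_years(shelf_life: float, migration_time: float,
                           years_to_crqc: float) -> float:
    """Mosca's inequality: data is at risk when x + y > z, where
    x = years the data must stay confidential (shelf_life),
    y = years needed to migrate to post-quantum cryptography (migration_time),
    z = estimated years until a cryptographically relevant quantum computer.
    Returns the number of years of exposure (0.0 if none)."""
    return max(0.0, float(shelf_life + migration_time - years_to_crqc))

# Hypothetical planning inputs, not predictions.
exposure = quantum_exposure_years(shelf_life=25, migration_time=5, years_to_crqc=15)
print(f"Years of exposure: {exposure}")  # Years of exposure: 15.0
```

The useful insight is that the migration time y counts against you: even an organization that doubts quantum computers are imminent can end up exposed if its data must remain secret for decades and its migration takes years.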

What this means for your company today

The good news is that you do not need to buy a quantum computer tomorrow morning. The bad news is that, if nothing is done, you may fall behind precisely when this technology begins to deliver practical advantages. The key is to act on three fronts: risk, opportunity, and preparation.

First, it is essential to understand your business’s risk and opportunity horizon. How long does your data need to remain confidential? If the answer is measured in decades, post-quantum cryptography immediately enters the conversation. Does your business model depend on complex optimization, sophisticated simulations, or pattern detection? In that case, Quantum Computing is not just a threat; it becomes a concrete opportunity for competitive advantage.

Next, it is worth starting small, but starting. You do not need to run code on a physical quantum computer: high-performance simulators, often GPU-based, already let you test algorithms, understand limitations, train teams, and map relevant use cases. A well-defined pilot project, even a modest one, usually generates more real learning than years of observation from a distance.
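One limitation that simulators make tangible is memory. A full state-vector simulation stores 2^n complex amplitudes for n qubits, so every additional qubit doubles the memory required. The sketch below computes this, assuming 16 bytes per amplitude (double-precision complex numbers); the function name is hypothetical, and other simulation techniques, such as tensor networks, trade memory and time differently, so the figures apply to the state-vector approach only.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector: 2**n complex amplitudes.

    16 bytes assumes double-precision complex numbers (complex128);
    single precision (complex64) would halve this."""
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (30, 40, 53):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:,.0f} GiB")
# 30 qubits: 16 GiB
# 40 qubits: 16,384 GiB
# 53 qubits: 134,217,728 GiB
```

Around 30 qubits fits on a single large GPU or workstation, which is why simulators are an excellent place to start; at 53 qubits (Sycamore’s size) a full state vector would need 128 PiB, which helps explain why real quantum hardware becomes necessary as problems grow.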

Finally, it is essential to strengthen your technological foundation. Quantum Computing will not replace legacy systems all at once; it will connect to them. This requires well-governed data, modern API-oriented architectures, strategic use of the cloud, and, above all, maturity in Artificial Intelligence. The most promising future applications are likely to be hybrid, combining AI, supercomputing, and quantum accelerators in the same workflow.

How Visionnaire can support your journey in this new landscape

At Visionnaire, we have spent decades following the evolution of technologies that once seemed distant from business reality but are now part of companies’ daily routines, such as AI itself. Quantum Computing will be no different. More important than “programming qubits” right now is building the foundations so that your organization is ready when this technology reaches large-scale practical use.

We can help your company assess its maturity in data, AI, and systems architecture, identifying whether you are ready to integrate emerging technologies. We also support the construction of a realistic technology roadmap that considers topics such as post-quantum cryptography, generative AI, GPU acceleration, and, further ahead, integration with quantum platforms. And, of course, we operate as a Software and AI Factory to develop tailor-made solutions, already optimized for high performance, simulation, and advanced algorithms.

You do not need to become a Quantum Computing expert to make good decisions today. What you do need is to decide whether you want to be a spectator or a protagonist in the next major wave of technological transformation. If your intention is to lead, the next step is simple: talk to Visionnaire and start designing, right now, the strategy that will position your company in the post-quantum world.